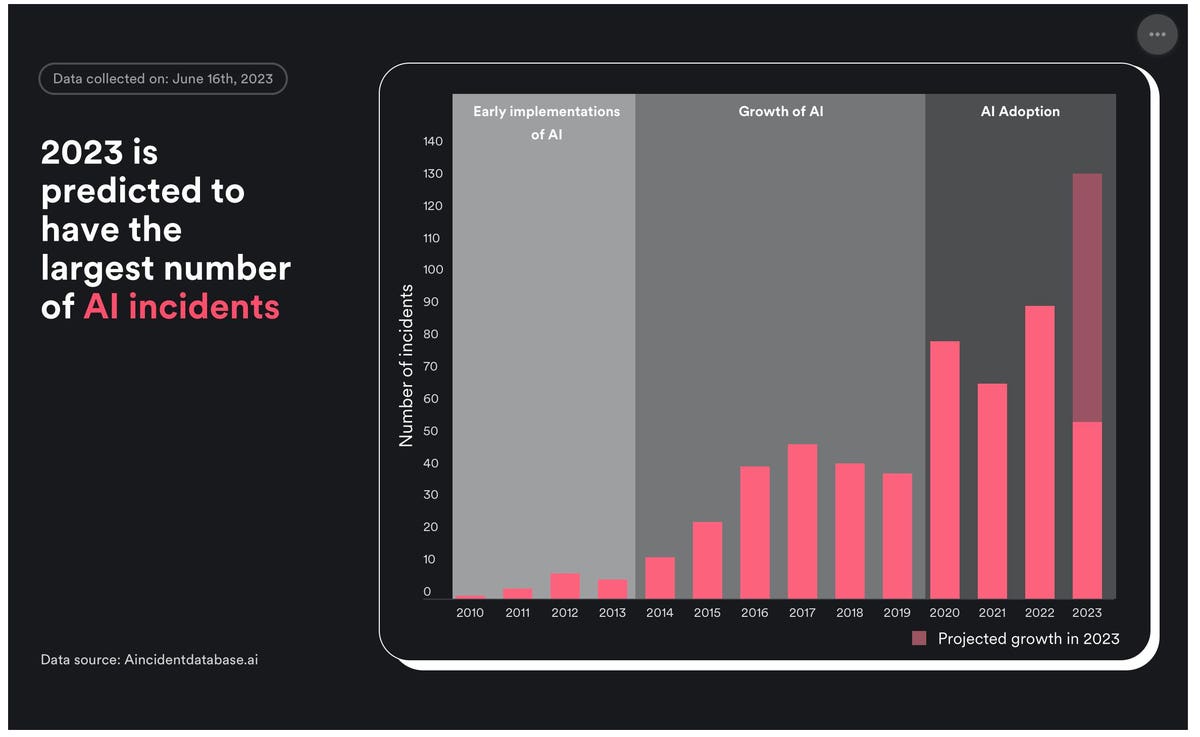

AI incidents per year since 2010. 2023 is projected to significantly outpace 2022.

Surfshark

The first “AI incident” almost caused global nuclear war. More recent AI-enabled malfunctions, errors, fraud, and scams include deepfakes used to influence politics, bad health information from chatbots, and self-driving vehicles that endanger pedestrians.

Companies involved in a significant percentage of AI incidents, according to Surfshark.

Surfshark

The worst offenders, according to security company Surfshark, are Tesla, Facebook, and OpenAI, which together account for 24.5% of all known AI incidents so far.

In 1983, an automated system in the Soviet Union falsely detected incoming nuclear missiles from the United States, nearly triggering a global conflict. That’s the first incident in Surfshark’s report (though it’s debatable whether an automated system from the 1980s counts as artificial intelligence). In the most recent incident, the National Eating Disorders Association (NEDA) was forced to shut down Tessa, its chatbot, after it gave dangerous advice to people seeking help for eating disorders. Other recent incidents include a self-driving Tesla that failed to notice a pedestrian and broke the law by not yielding to them in a crosswalk, and a Jefferson Parish resident who was wrongfully arrested by Louisiana police after a facial recognition system developed by Clearview AI allegedly mistook him for another individual.

According to Surfshark, the number of AI incidents is growing fast.

That’s not shocking, given the massive increase in investment in and use of AI over the past year. According to software review service G2, search traffic for chatbots rose 261% from February 2022 to February 2023, and the three fastest-growing software products in G2’s entire database are AI products.

And while most people take AI systems with a grain of salt, business executives who buy software seem to trust them.

“Even amidst wide skepticism surrounding AI use, 78% of respondents stated that they trust the accuracy and reliability of AI-powered solutions,” a G2 representative told me, based on a recent survey of 1,700 software buyers.

Despite that trust, the number of AI incidents has jumped from an average of 10 per year between 2014 and 2016 to an average of 79 major AI incidents annually in the past few years. That’s a 690% increase in just six years, and the pace is accelerating.
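The 690% figure is the relative change between the two annual averages quoted above, 10 and 79 incidents per year. The second expression assumes, purely for illustration, that the increase compounded evenly over the six years; the report does not say the growth was steady:

$$
\frac{79 - 10}{10} \times 100\% = 690\%, \qquad \left(\frac{79}{10}\right)^{1/6} - 1 \approx 0.41
$$

Under that simplifying assumption, incidents would have grown by roughly 41% a year.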

By May 2023, the year had already seen half as many incidents as all of 2022.

“A recent prominent example is the viral deepfake image of Pope Francis in a white puffer jacket, demonstrating the astonishing realism achievable with AI image generators,” says security company Surfshark spokesperson Elena Babarskaite. “However, the implications go beyond amusement, as AI-enhanced fake news could continue to mislead the public in the future. Other, more severe AI incidents can go as far as enabling racism, violence, or crimes.”

In fact, 83 of the 520 AI incident reports that Surfshark lists used the word “black,” including the wrongful arrest mentioned above and the well-known event in which Facebook’s AI tools labeled black men “primates.”

Some of that bias is being trained out of AI systems, but in the massive rush to add AI to everything, safety and equity clearly aren’t always key considerations.

The incidents tied to Facebook, Tesla, and OpenAI, which together account for 24.5% of those recorded, vary by company.

Facebook’s challenges range from algorithms failing to detect violent content to scammers using deepfaked images and video on the platform to defraud people.

Tesla’s AI issues center on the company’s Autopilot and Full Self-Driving software products, which can brake unexpectedly (a sudden stop caused an eight-car accident in San Francisco in late 2022) or fail to notice vehicles and people in front of them.

OpenAI’s challenges mostly concern the privacy of data used in training, as well as the privacy of OpenAI users. OpenAI’s technology has also allegedly made death threats against users in Bing Search.